Toward a future without fraud

A collaboration between Which? and Demos Consulting.

Download our full report:

pdf (3.05 MB)


Executive summary

Since 2017, successive governments have stated an ambition for the UK to be the safest place in the world to be online. Yet, the UK has been described as the scam capital of the world and UK consumers are being targeted by fraudsters from across the globe. The scale of the harm caused – much of which occurs on social media platforms – is sobering. Nearly one in ten adults in the UK have been a victim of fraud [1], and in 2021 victims lost £2.6bn [2].

Which? and Demos Consulting spent 12 months looking into adverts for investment products, in order to study the potential scale and character of misleading and fraudulent adverts. We collected and analysed over 6,300 adverts from Meta’s ‘Ad Library’. This is a publicly available tool which shows, for a given country, the adverts visible to users of Instagram and Facebook. These adverts had been approved by Meta for display on its platforms. A team of researchers analysed 1,064 of them. They labelled each advert against a set of ‘risk flags’ designed to indicate potentially misleading content (for example, whether an advert claimed returns were ‘guaranteed’).

Among the adverts we coded, we found 484 adverts for investment products or services, of which 89 raised three or more serious risk flags. Potentially misleading adverts often promised massive, risk-free and speedy returns, playing on consumers’ fears of missing out on opportunities.

To test whether these flags could be applied automatically, we also trained a series of algorithms to label adverts according to their content, and ran them over the whole dataset. Our experiments showed that algorithms can help to identify misleading content. The systems we trained had a high margin for error. Even so, this type of automated labelling is likely to be vital in helping ad tech platforms use the vast data resources at their disposal to tackle misleading and fraudulent adverts.

This research reinforces the case for strong regulation around online harms, further sharpening the imperative for the Online Safety Bill to be passed into law without delay. It demonstrates the value of transparency from platforms on the advertising shown to their users, and the need for all ad tech platforms to work closely with the Financial Conduct Authority, other expert stakeholders and each other to combat this shared problem. Finally, it suggests that stronger due diligence processes, requiring advertising companies to know who is buying space on their platforms, are likely to be necessary to supplement automated and human detection.

Introduction

Fraud is now the most prevalent form of crime in England and Wales, with 6.6% of people aged 16 or over reported as being victims of fraud in the year to June 2022 [3]. In 2021 victims lost £2.6bn to fraud [4]. In addition to the financial cost, this has a significant human cost: being the victim of a scam is associated with lower levels of happiness and life satisfaction, and greater anxiety. Which? estimate the harm from lost wellbeing attributable to online scams amounts to £7.2 billion a year [5].

Most of this fraud is cyber-enabled [6], and it is often perpetrated through online advertising [7]. Bad actors post fraudulent adverts online which can lead to a scam: for example, advertising for fake investment products. Consumers are presented with glamorous images encouraging them to invest in products like cryptocurrencies. They later find that the website they invested through, and their contacts there, have disappeared without trace [8].

The UK has a number of laws to tackle scams including wide ranging fraud legislation, strict regulation of the advertising of financial products and legislation to protect consumers from misleading adverts [9]. However, outrageous levels of fraud suggest that these rules are routinely breached online. Platforms have their own policies and primarily automated processes to tackle fraudulent advertising. But Which? has found a wide variety of scam adverts online, including adverts for cryptocurrencies that appear to be endorsed by a celebrity, adverts that suggest they’ll help you claim a tax rebate and adverts that pretend to offer tech support [10]. This shows current systems are not sufficient to protect consumers.

There is an urgent need for more comprehensive action and a holistic approach to tackle online scam adverts. The Online Safety Bill must be passed into law as soon as possible, and the government should bring forward further legislation to tackle misleading adverts and scam adverts across the rest of the web through the Online Advertising Programme. For this regulation to succeed, platforms and other stakeholders will need to work together, sharing data and insight, to understand how we can best protect consumers from the ever-evolving web of online fraud.


This report

This report seeks to explore how, in practice, we can protect consumers from misleading and fraudulent online advertising. It presents results from a collaborative project by Which? and Demos Consulting, investigating the nature of investment advertising across Meta platforms – including Facebook and Instagram – using Meta’s public Ad Library. The report details sophisticated examples of shady adverts putting consumers at risk, including some that are potentially fraudulent. We explore the ways in which adverts can mislead consumers. We also examine whether a risk-based approach to identifying these adverts could be automated and applied at scale.

We strongly believe that platforms have an opportunity to create a continuously improving anti-fraud model. Such a model would combine three elements:
- due diligence at the point of advertising account creation;
- improved identification of problematic content, as explored in this research;
- access to external data sources of known fraud red flags, such as data held by the Financial Conduct Authority (FCA).

This report sets out our initial understanding of how better identification of problematic content could reduce consumer harm by tackling misleading and fraudulent advertising at scale.

This research could have been conducted on any type of risky advert. We chose investment adverts because investment fraud is increasingly common [11] and is associated with significant financial losses. Platforms and regulators have also already identified this area as a cause of particular concern [12].

- Section 1 of the report provides an overview of the issue of scam investment adverts and the current regulatory context.

- Section 2 describes the methodology we’ve taken to study this problem at scale.

- Section 3 provides a description of the adverts we collected from Meta’s ad library, and the nature of the investment products and services they are advertising.

- Section 4 deepens this analysis to explore the risks these adverts pose to consumers.

- Section 5 builds on the risk framework established in Section 4. It tests whether the risks posed by investment adverts can practically be assessed using machine learning technologies.

- Section 6 presents our reflections on this work and makes recommendations which could help protect consumers from scams and other high-risk adverts on online platforms.

1. The regulatory context

The regulation of investment advertising online

Online advertising regulation in the UK is undertaken through a combination of different pieces of legislation, regulation, self-regulation, industry standards and compliance with advertising codes.

There are two important pieces of legislation currently in place. Advertising must not constitute an offence under the Fraud Act 2006, which outlaws fraud. Nor may it constitute an offence under the Consumer Protection from Unfair Trading Regulations 2008 (CPRs), which outlaw unfair and misleading trading.

In practice, advertising (including online advertising) is regulated through the UK Code of Non-broadcast Advertising, Sales Promotion and Direct Marketing (CAP Code) [13], administered by the Advertising Standards Authority (ASA). The CAP Code mirrors the principles set out in the CPRs.

Given the specific risks and technical nature of financial products, specific regulations apply to their advertisements. The regulation of financial promotions and adverts, including those for regulated investments, is overseen by the Financial Conduct Authority (FCA) [14] under s137S of the Financial Services and Markets Act 2000. These rules are particularly important. The regulation of financial promotions is the main tool available to the FCA to protect consumers from high-risk investment products which fall outside the regulatory perimeter [15].

Even so, many types of investment are not financial products at all. Examples include property, land, wines and spirits, and commodities. These generally fall outside the FCA’s remit [16]. They are regulated by the wider CAP Code, CPR and Fraud Act rules which apply to all advertising [17].

Online platforms are not effectively held to account for inappropriate financial promotions on their services. This is because of an exemption in the financial promotions regime that applies where they act as a ‘mere conduit’ for an advert for a financial product [18]. The FCA has called for more powers over online platforms that promote financial products [19].

Both HM Treasury and the FCA are seeking to strengthen the rules on financial promotions for high-risk investment products. The government recently announced its intention to strengthen the rules on misleading cryptocurrency adverts [20]. The FCA is introducing additional friction into the sale of high-risk investments, to stop firms selling inappropriate products to unwitting consumers [21]. In addition, the Financial Services and Markets Bill proposes new powers to bring ‘digital settlement assets’ into regulation [22]. These would be likely to cover a number of unregulated cryptoasset products and services in due course.

However, these measures will not affect the promotion of investments that remain outside the regulatory perimeter in the meantime. Nor will they stop fraudulent firms from perpetrating investment scams.

Self-regulation of investment advertising by tech companies

Major tech firms, specifically Meta and Google, control a substantial portion of the digital advertising market. The CMA estimated that in 2019 approximately 80% of the £14bn spent on digital advertising in the UK was spent on Google and Facebook [23]. Over half of UK display advertising revenues in 2019 were generated by Facebook (now known as Meta) [24].

Tech firms have created their own standards for advertising. These comprehensively cover the range of harms from misleading and fraudulent advertising. Meta products have advertising policies [25], community standards [26] and community guidelines [27] which set out the rules for what content is and is not acceptable to post on its platforms. These address fraud and scams in a similar way to other online harms. On Facebook, the Fraud and Deception policy outlines that users are not allowed to post:

"Content that provides instructions on, engages in, promotes, coordinates, encourages, facilitates, recruits for, or admits to the offering or solicitation of any of the following activities:

- Deceiving others to generate a financial or personal benefit to the detriment of a third party or entity through:

  - Investment or financial scams:

    - Loan scams.

    - Advance fee scams.

    - Gambling scams.

    - Ponzi or pyramid schemes.

    - Money or cash flips or money muling.

    - Investment scams with promise of high rates of return." [28]

There are also policies banning advertising illegal products or services [29], misleading claims [30], and prohibited financial products and services [31].

These purported bans, however, have historically failed to translate into meaningful action by tech companies to tackle fraud [32]. In December 2021, Meta announced that it intends to prevent firms which are not registered with the FCA from advertising financial services and products on its platforms [33]. This change would bring Meta into line with recent policy changes made by Google [34]. Meta has committed to introducing this by the end of 2022 [35], and as of 20 October 2022 stated that the roll-out was in progress [36].

It is unclear, however, when this will be complete. During this research, we found numerous examples of adverts for regulated products from unregulated providers being displayed to Meta users in July 2022. The FCA has expressed frustration at the delay. It suggests that the delay, combined with tighter policies on investment ads on other platforms, has led to a surge in scams on Meta platforms [37].

Meta enforces its policies primarily through automated systems that review adverts before they begin running on its platforms. In some instances this is supplemented by human review [38]. Many platforms have had notable success using automated systems to remove content related to the most serious harms, including child sexual exploitation and terrorism. Much of this effort revolves around sophisticated image and video detection [39].

However, fraudulent advertising poses a specific challenge which will require a different approach. Our experience during this research suggests that any database of media used in fraudulent ads would contain primarily anodyne stock images, designed to blend in with the rest of the platform. Matching adverts against such a database would therefore do little to separate fraudulent adverts from legitimate ones.

Automated detection is crucial in enabling platforms to filter the vast quantities of data they handle and remove misleading and fraudulent advertising. Platforms can also combine it with the contextual data they hold on the behaviour of advertisers. We therefore believe there is substantial room for improvement in the way ad tech platforms detect and remove this content. At present, too many fraudulent adverts make it through to consumers.

The Online Safety Bill

The Online Safety Bill seeks to introduce a new regulatory framework for online harms with the stated purpose of making the UK ‘the safest place in the world to be online’.

After campaigning from Which? and others, the Government included fraudulent advertising within the scope of the Bill when it was introduced into Parliament in March 2022. The Bill creates a duty for large social media platforms and large search engines to prevent their users from encountering fraudulent adverts. This represents a major step forward in ensuring that consumers are protected from fraud. Platforms will be required to introduce proportionate systems to stop fraud before it reaches consumers. These systems should include due diligence checks, alongside improvements in content scanning of the kind discussed in this research.

The Bill defines fraudulent adverts according to whether the content amounts to one of a number of offences. These derive from the Fraud Act 2006, the Financial Services and Markets Act 2000 and the Financial Services Act 2012. There are still some questions about how platforms will identify whether content amounts to one of these offences.

Regardless of this issue, there is a clear and pressing need for better protection for consumers and others online. The Online Safety Bill must swiftly conclude its passage through Parliament and be passed into law as a matter of urgency.

The Online Advertising Programme

Alongside the Online Safety Bill, the government consulted on wider-ranging reform of the whole online advertising ecosystem. The consultation covered the advertising intermediaries that operate programmatic advertising outside social media platforms and search engines, which fall outside the scope of the Online Safety Bill. It also considered harms from misleading advertising, which the Bill does not cover either.

The Government asked whether improved self-regulation would be sufficient to tackle the harms arising from online advertising. The alternatives were a regulatory backstop or full statutory regulation. Research commissioned by Which? has shown that scammers operate freely in advertising outside the major platforms [40].

There is no clear regulator for tackling scams in online advertising.

- The ASA operates a Scam Ad Alert system to inform platforms and ad networks of obvious scams but the ASA is not constituted, resourced nor has the expertise to tackle criminal actors online [41].

- The FCA tackles misleading advertising for regulated financial products and the firms that offer them but has no power over unregulated investments or other types of scams.

- Law enforcement has the powers to investigate and arrest scammers but cannot ensure that platforms and intermediaries take the steps necessary to protect consumers from these scams in the first place.

In practice, publishers, including tech companies, are unlikely to be liable for the content of paid-for adverts that they allow advertisers to show their users. As a result, online platforms have little legal incentive, or threat of sanction, to prevent adverts that can lead to a scam from appearing online in the first place.

The Online Advertising Programme presents an opportunity to address the whole ecosystem, complementing the work started in the Online Safety Bill and filling the gaps in the current system. Protections against fraudulent adverts equivalent to those in the Online Safety Bill need to be put in place across the internet.

The programme should also be used to ensure that platforms and intermediaries (such as advertising technology providers) [42] are held responsible for misleading advertising on their services that breaches the Consumer Protection from Unfair Trading Regulations 2008. Suppose platforms are only held responsible for adverts that clear the high bar of being obviously fraudulent. In that case, potentially harmful adverts that mislead consumers, and take them on a journey to fraudulent outcomes, will continue to spread online.

In this section we have set out the weaknesses of the current regulatory frameworks to protect consumers from misleading and fraudulent online advertising. For the remainder of this report, we explore in greater detail what misleading and fraudulent investment advertising can look like, and how it could practically be tackled.

Summary

- Action is clearly needed to tackle fraud. Existing regulations have struggled to keep pace with technological change, and the current regime creates little legal incentive for platforms to prevent ads that can lead to a scam from appearing online.

- Platforms currently have their own policies banning harmful adverts on their platforms. These are enforced through mainly automated processes which seek to identify these harmful adverts before they are published.

- While these processes have been successful in detecting and removing some of the worst types of harmful content on platforms, the disguised and ever-changing nature of fraud presents specific challenges. There is substantial room for improvement in automated processes to better identify and remove harmful adverts.

- The Online Safety Bill seeks to introduce a new regulatory framework for online harms, including offering protection from fraudulent advertisements.

- Wider changes to the regulation of online advertising are also being considered through the Online Advertising Programme. These changes create an opportunity to hold platforms and others in the advertising industry responsible for preventing misleading and fraudulent advertising from reaching consumers. Doing so could drastically improve consumer protection.

2. Methodology

One of the challenges in responding to misleading and fraudulent advertising online is that it can be difficult to get a sense of the scale of the issue, or the tactics used by scammers targeting people in the UK. Online advertisements are ephemeral, targeted, and consumed in isolation – each person will often be shown their own unique set of ads on their browser or social media pages, depending on that platform’s assessment of their interests (see “How does targeted advertising work on Meta platforms?” for more details). This makes it difficult to work out how best to tackle this harmful content.

Recently, however, tools have been published by social media companies, including Meta, which provide some data on the adverts shown to their users. These tools allow a rare top-down view of the online advertising industry, and brand new insight into the risks it poses to consumers.

Which? has worked with Demos Consulting to study the scale and character of investment advertising which may pose a risk to consumers on Meta platforms using the Meta Ad Library, a public repository of advertising visible to users of Meta products (such as Facebook and Instagram).

Over an eleven-month period between October 2021 and August 2022, we collected and analysed 6,357 [43] adverts. They were created by 2,759 Facebook pages, shown to users in the UK, and fell into one of the following categories:

- Adverts using language found to be used in advertising for scam investment products

- Adverts containing a link to a known scam site

Not all of these advertisements are misleading or fraudulent and we detail the process used to identify potentially harmful content within this dataset below. This work, however, has given us a substantial, if non-exhaustive, collection of adverts promoting investment products across Meta’s platforms. It also provides an understanding of the risks this content poses to consumers which can help us understand how we can better protect consumers from misleading and fraudulent advertising.

How does targeted advertising work on Meta platforms?

Like many of the advertisements seen while browsing the internet, adverts on Meta platforms are not ‘written in’ to the page. Instead, every time a visitor loads a page containing ads on Facebook, or has an advert inserted into their Instagram timeline, the company decides at that instant which advert to show them. Which advert is shown depends on what Meta knows about the visitor, and how much the advertiser has paid to reach people like them.

Meta gains information on visitors from a number of sources. It measures how people use its own products: what they pay attention to on Instagram, for example, or pages liked on Facebook. It also gathers data from a broad network of non-Meta sites which host ‘Facebook pixels’. These are pieces of code which can send behavioural data, such as the contents of a shopping cart, from that site back to Meta, helping it build up a user’s profile [44].

Meta then allows advertisers to use this information to better target their ads, based on users’ location (from country level to within 1km of a given address), their demographics (including precise age, education level, income and life events, such as being away from home), their interests (including hobbies and taste for various alcoholic drinks) and their behaviours (including whether they are ‘engaged shoppers’, and whether they have an anniversary coming up) [45].

These targeting tools are greatly beneficial to advertisers, who can avoid wasting money advertising to people unlikely to buy their product. There is a danger, however, that they could also be used by scammers to target those most likely to be vulnerable to buying in.

Data collection

The Meta Ad Library [46] is an impressive public resource. It displays advertisements running across Facebook, Facebook Messenger and Instagram, as well as on Meta’s ‘Audience Network’ tool. Like other advertising networks, Audience Network matches developers who have empty advertising space (on their website or mobile app, for example) with companies that want to advertise there. Every time someone loads a page containing an ad, Meta runs an auction to determine what gets shown, and drops the highest-bidding advertiser’s content into the available space [47]. All of this happens automatically, in the blink of an eye.
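The auction step described above can be illustrated with a very small model that picks the highest bid for a single ad slot. This is a deliberately simplified sketch that follows the description above. The `Bid` class, its fields and `run_auction` are hypothetical names, and a real ad auction may weigh factors other than the bid amount.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Bid:
    """One advertiser's bid for a single ad slot (hypothetical fields)."""

    advertiser: str
    amount: float  # what the advertiser will pay for this impression


def run_auction(bids: list[Bid]) -> Bid | None:
    """Return the winning bid for one ad slot, or None if there are no bids.

    Simplification: the winner is picked on bid amount alone, as in the
    description above. max() returns the first maximal item, so ties go
    to the earliest bid in the list.
    """
    if not bids:
        return None
    return max(bids, key=lambda bid: bid.amount)


# Usage: three hypothetical advertisers competing for one slot.
winner = run_auction([Bid("A", 0.40), Bid("B", 1.10), Bid("C", 0.75)])
print(winner.advertiser)  # B
```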

The Ad Library presents a simple interface, asking you to define a country and type of advert, then allowing you to run a keyword search returning adverts of that type shown to people in that country. Figure 1 offers an illustration of ad library search results.

Figure 1: Genuine Which? adverts from a Meta Ad Library search

For advertisements which concern ‘social issues, elections or politics’, Meta provides an Application Programming Interface (API). This allows researchers to access data on these adverts at scale, as structured data [48]. For all other adverts, however, Meta only permits access to Ad Library data manually, through the web interface shown above. This makes advertising trends challenging to study at scale. Researchers effectively cannot study harmful activity on its platforms, even at the modest scale we have attempted here, without falling foul of the platforms’ terms of service.

For the purposes of this research, Demos Consulting wrote a piece of software able to search Meta’s Ad Library and record the results in a database (a ‘scraper’). We made the considered decision to use this approach as we believe this research is in the public interest, and necessary to meet the shared objective of protecting consumers from misleading and fraudulent advertising. We were careful to minimise any negative impact of this approach, following two key principles throughout our data collection:

- To avoid the risk of degrading the service for other users, data was collected at ‘human speed’, by adding a delay of at least 5 seconds between each request to the Ad Library.

- Only data publicly viewable through Meta’s Ad Library was collected. At no point was any data collected related to individual accounts on any Meta platform.
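The ‘human speed’ principle can be sketched as a simple rate limiter that waits until at least 5 seconds have passed since the previous request. This is a minimal illustration, not the scraper Demos Consulting built. `fetch_politely` and the injected `fetch` function (which would perform one Ad Library search) are hypothetical. The clock and sleep functions are parameters only so that the timing can be tested without real waiting.

```python
from __future__ import annotations

import time
from typing import Callable, Iterable

MIN_DELAY_SECONDS = 5.0  # at least 5 seconds between requests, as described above


def fetch_politely(
    queries: Iterable[str],
    fetch: Callable[[str], str],
    min_delay: float = MIN_DELAY_SECONDS,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Call `fetch` for each query, never starting two requests less than
    `min_delay` seconds apart."""
    results = []
    last_request: float | None = None
    for query in queries:
        if last_request is not None:
            # Wait only for whatever part of the delay has not already elapsed.
            wait = min_delay - (clock() - last_request)
            if wait > 0:
                sleep(wait)
        last_request = clock()
        results.append(fetch(query))
    return results
```

Measuring from the start of the previous request means that slow responses count towards the delay. The limiter never waits longer than it needs to.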

In order to collect adverts, our program searched the Ad Library for advertisements active in the United Kingdom which met one of the following criteria:

1. Use of a relevant search term

We conducted searches using a list of 183 search terms relevant to investment. These terms were a combination of generic terms (‘invest now’, ‘risk free income’, ‘future millionaire’ etc.) and specific key terms reported to be linked to financial scams (‘easymoney’, ‘investUK’, ‘legitmoneyflips’ etc.) [49].

Over the course of the research, this list was iterated upon to include language found to be prevalent within fraudulent adverts, and exclude terms which were producing irrelevant results. Terms were also prioritised based on the risk coding, explained below, with terms found to return higher numbers of flagged adverts searched more often. A full list of all keywords used is included in the technical annex.
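One way to picture terms being ‘searched more often’ according to their yield is to split a fixed search budget in proportion to the number of flagged adverts each term has returned. The report does not describe the exact scheme, so this one is assumed. `allocate_searches` is hypothetical, and adding one to every count is our illustrative choice, so that new or low-yield terms keep a non-zero share.

```python
from __future__ import annotations


def allocate_searches(flagged_counts: dict[str, int], budget: int) -> dict[str, int]:
    """Split `budget` searches across terms in proportion to (flagged adverts + 1).

    Uses largest-remainder rounding so the allocations sum exactly to `budget`.
    """
    if not flagged_counts:
        return {}
    weights = {term: count + 1 for term, count in flagged_counts.items()}
    total_weight = sum(weights.values())
    exact = {term: budget * w / total_weight for term, w in weights.items()}
    alloc = {term: int(share) for term, share in exact.items()}
    # Hand out the searches lost by rounding down, largest fractional part first.
    leftover = budget - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda t: exact[t] - alloc[t], reverse=True)
    for term in by_remainder[:leftover]:
        alloc[term] += 1
    return alloc
```

Take some made-up counts: 9, 4 and 0 flagged adverts for ‘invest now’, ‘future millionaire’ and ‘easymoney’ respectively, with a budget of 20 searches. The function allocates 13, 6 and 1 searches.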

2. Presence of a link to a suspicious site

We also looked for links to sites known, or suspected, to contain fraudulent content. These were taken from public lists compiled by the UK’s Financial Conduct Authority (FCA) and by the Financial Commission in the US, a self-regulatory organisation concerned with regulating foreign exchange investment providers. Each of these organisations provides a public blacklist of firms found to be trading fraudulently. At the outset of the project, we searched the Ad Library for every firm on these blacklists.

We also used live data from Scamadviser, a service which generates and publishes ‘Trust Scores’ for over a million sites each month, and makes newly evaluated sites available through an API [50]. We wanted to know whether sites newly flagged as suspicious were being linked to in adverts on Meta platforms. To find out, we filtered Scamadviser’s daily list for sites which met two conditions: the word ‘invest’ appeared in their site title, description or advertising keywords, and they had been assigned the lowest possible trust score of 1/100. This typically returned three to four thousand URLs each day, which were then used as search terms in the Ad Library.
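The daily filter described above amounts to two conditions on each site record. This sketch assumes each record has already been parsed into a simple structure. `SiteRecord` and its field names are hypothetical, and do not describe Scamadviser’s actual API format. It also assumes a case-insensitive substring match, so ‘invest’ would also match words such as ‘investment’ and ‘investor’.

```python
from __future__ import annotations

from dataclasses import dataclass, field

LOWEST_TRUST_SCORE = 1  # the lowest possible score on the 1/100 scale


@dataclass
class SiteRecord:
    """One newly scored site from a daily list (hypothetical structure)."""

    url: str
    trust_score: int
    title: str = ""
    description: str = ""
    keywords: list[str] = field(default_factory=list)


def urls_to_search(records: list[SiteRecord]) -> list[str]:
    """Return URLs of lowest-scored sites that mention 'invest' anywhere in
    their title, description or advertising keywords."""
    urls = []
    for record in records:
        text = " ".join([record.title, record.description, *record.keywords]).lower()
        if "invest" in text and record.trust_score == LOWEST_TRUST_SCORE:
            urls.append(record.url)
    return urls
```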

One challenge encountered during this search was the fact that many adverts do not remain on the Ad Library for long. In 94% of cases we were able to collect data on when the advert was first posted. Four in ten of these adverts (42%) had been active on the platform for less than two days when they were collected.

Some of this is due to the way our scraper was designed. Where a search returned more than one page of results, we prioritised collecting the most recent adverts. However, it also suggests that a large proportion of adverts on Meta quickly become unavailable.

Some of this removal is due to Meta’s own policies. Social and political adverts are preserved for transparency. Other adverts, however, are removed from the Ad Library entirely once they become inactive. During this work, we found that the most concerning content was sometimes removed relatively quickly. It is unclear whether this was done by Meta or by the advertisers themselves.

As a result, problematic content often vanishes before it can be reviewed, and regular monitoring is needed to judge the true scale of the problem. Previous research shows that scammers make large amounts of money in short periods of time. It also shows that they factor the likelihood of their adverts being taken down into their business model [51].
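The figures reported above are simple proportions over the collected records: a start date was available for 94% of adverts, and 42% of those had been active for under two days. The sketch below shows the calculation under two stated assumptions. First, each advert is represented as a hypothetical pair of (start date, collection date), with `None` where no start date was available. Second, ‘less than two days’ is read as fewer than two whole calendar days between the two dates. `start_date_stats` is an illustrative name.

```python
from __future__ import annotations

from datetime import date


def start_date_stats(
    adverts: list[tuple[date | None, date]], max_days: int = 2
) -> tuple[float, float]:
    """Return (share of adverts with a known start date,
    share of those that had run for fewer than `max_days` days when collected)."""
    if not adverts:
        return 0.0, 0.0
    dated = [(start, collected) for start, collected in adverts if start is not None]
    if not dated:
        return 0.0, 0.0
    short_lived = sum(
        1 for start, collected in dated if (collected - start).days < max_days
    )
    return len(dated) / len(adverts), short_lived / len(dated)
```

The second share is computed over dated adverts only, matching the report’s ‘four in ten of these adverts’.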

Data analysis

Figure 2: The analytical process used in this research

In order to understand the nature of and risks posed to consumers by investment ads on Meta platforms, Which? and Demos undertook a five-stage analysis process.

- A dataset of 1,064 adverts was created for manual analysis [52]. An 18-question coding framework was designed to examine the presence of potential risk factors for consumers throughout the dataset. This framework was designed by Which? experts, reflecting our views on factors which could potentially mislead consumers.

- Adverts were initially coded as to whether they related to a financial investment opportunity – that is, the opportunity to make monetary returns over time – or not. Some adverts were included in the data collection because they used the word ‘investment’ when the product or service on offer was not related to financial investment, e.g. advertisements for further education which described this as an ‘investment in the future’. These adverts were removed from the dataset, leaving a sample of 484 investment-related adverts.

- Adverts were then coded by the nature of the offer: in particular, whether the advert was promoting a specific investment product or an investment-related service, and what that product or service was. This step was needed because, as set out in Section 1, different types of investments face different rules, depending on whether or not they fall within the FCA regulatory perimeter. It allowed us to understand the types of products and services advertised within the sample. Our categorisation is presented in Section 3.

- A coding framework was developed which aimed to identify characteristics of adverts which may mislead consumers. In building this framework, we considered the various rules that investment adverts should abide by to avoid misleading consumers, including the Consumer Protection from Unfair Trading Regulations 2008 (CPRs), and the Financial Conduct Authority’s Financial Promotions Rules (FPRs). All adverts should follow the CPRs, and although adverts for some types of investments are not governed by the FPRs (where the products fall outside of the FCA’s regulatory perimeter) we still consider that the requirements set out in the FPRs represent good practice to ensure that adverts for investment products do not mislead consumers. Our framework aimed to assess, through a series of closed questions, whether promotions provide information in a way that is potentially misleading to consumers, by inclusion of statements, omission of information, or general presentation of information. All 484 investment adverts in our manual sample were then double-coded using this framework, with coding reconciled where researchers disagreed. The findings of this analysis are presented in Section 4, and the full coding framework is provided in Annex A.

- To test whether automated systems could help bring adverts to the attention of human analysts, we used Keras to train a series of neural nets designed to determine algorithmically whether an advert mentioned investment, and whether it triggered one of four risk flags. These algorithms were used to process all 6,357 collected adverts. Our manual analysis showed that human oversight still has a role in detecting and investigating high-risk adverts; for any intervention by Meta to be effective, however, such adverts must also be detectable at scale. The outcomes of our efforts are described in Section 5.
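The double-coding and reconciliation step described in the third point above can be illustrated with a short Python sketch. It assumes each researcher's answers are held as a mapping from a hypothetical advert ID to that advert's closed-question answers; the question IDs are placeholders, not the wording of the Annex A framework. The sketch reports the share of answers on which the two coders agree and lists the answers that would need reconciling. The report does not say whether an agreement statistic was calculated, so this is an assumption about how the comparison might be organised.

```python
from typing import Dict, List, Tuple

# Hypothetical structure: advert ID -> {question ID -> yes/no answer}
Coding = Dict[str, Dict[str, bool]]


def compare_coders(coder_a: Coding, coder_b: Coding) -> Tuple[float, List[Tuple[str, str]]]:
    """Return raw agreement and the (advert, question) pairs needing reconciliation."""
    agreed = 0
    total = 0
    disagreements: List[Tuple[str, str]] = []
    # Only compare adverts and questions answered by both researchers
    for advert_id in sorted(coder_a.keys() & coder_b.keys()):
        answers_a = coder_a[advert_id]
        answers_b = coder_b[advert_id]
        for question in sorted(answers_a.keys() & answers_b.keys()):
            total += 1
            if answers_a[question] == answers_b[question]:
                agreed += 1
            else:
                disagreements.append((advert_id, question))
    agreement = agreed / total if total else 1.0
    return agreement, disagreements


# Invented example answers, not data from the study
coder_a = {
    "ad_001": {"risk_warning": False, "guaranteed_returns": True},
    "ad_002": {"risk_warning": True, "guaranteed_returns": False},
}
coder_b = {
    "ad_001": {"risk_warning": False, "guaranteed_returns": False},
    "ad_002": {"risk_warning": True, "guaranteed_returns": False},
}

agreement, to_reconcile = compare_coders(coder_a, coder_b)
print(f"Agreement: {agreement:.0%}")  # Agreement: 75%
print(to_reconcile)  # [('ad_001', 'guaranteed_returns')]
```

Listing disagreements, rather than only scoring them, mirrors the reconciliation step: each listed pair is a point where researchers would discuss the advert and agree a final code.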

Summary

- In this study we set out a framework for identifying potentially harmful adverts amongst those that have been published on Meta platforms (Facebook and Instagram), and show that it can be used to identify additional harmful adverts.

- We explore the scale and character of investment advertising which may pose a risk to consumers on Meta platforms, using the Meta Ad Library, a public repository of advertising visible to users of Meta’s products.

- Between October 2021 and August 2022 we collected and analysed 6,357 adverts shown to users of Meta platforms in the UK which used language found in adverts for scam investment products, or which contained a link to a known scam site.

- A sample of 1,064 of these adverts was manually coded: first as to whether they related to a financial investment opportunity, then as to the type of opportunity, and finally for the presence of specific factors considered to risk misleading consumers, building on the Financial Conduct Authority’s Financial Promotions Rules and the Consumer Protection from Unfair Trading Regulations.

- This framework and the manually coded dataset were then used to train a series of neural nets designed to determine algorithmically whether an advert mentioned an investment, and whether it raised any of our four risk flags. These algorithms were used to process the whole dataset of 6,357 adverts, to demonstrate the potential of automated methods to detect misleading and fraudulent investment adverts.
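The collection criterion in the third point above (adverts that use language associated with scam investment adverts, or that link to a known scam site) amounts to a simple filter, sketched below in Python. The phrase list and domain list are hypothetical placeholders: the report does not publish its search terms here, and the sketch does not model how the Meta Ad Library is actually queried.

```python
from typing import FrozenSet, Iterable, Tuple
from urllib.parse import urlparse

# Hypothetical search phrases and scam domains, for illustration only
SCAM_PHRASES: Tuple[str, ...] = ("guaranteed returns", "passive income", "double your money")
KNOWN_SCAM_DOMAINS: FrozenSet[str] = frozenset({"example-scam.test"})


def link_domain(url: str) -> str:
    """Return the lower-cased host of a URL, without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def should_collect(text: str, links: Iterable[str],
                   phrases: Tuple[str, ...] = SCAM_PHRASES,
                   scam_domains: FrozenSet[str] = KNOWN_SCAM_DOMAINS) -> bool:
    """Keep an advert if it uses a listed phrase or links to a known scam domain."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in phrases):
        return True
    return any(link_domain(url) in scam_domains for url in links)


print(should_collect("Earn passive income from home!", []))  # True
print(should_collect("Visit our new store", ["https://www.example-scam.test/offer"]))  # True
print(should_collect("Book a table tonight", ["https://restaurant.example/menu"]))  # False
```

A filter like this casts a wide net by design: it gathers candidates for coding rather than deciding which adverts are harmful, which is why the collected adverts still needed the manual and automated labelling described above.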

3. What investment opportunities are being advertised on Meta platforms?

This section, and the one that follows, summarise the findings of our manual analysis of 1,064 adverts collected from the Meta Ad Library.

Firstly, we identified whether an advert was offering an investment-related opportunity, with 484 adverts judged to be offering something related to investment. These adverts ranged from recognisable brands offering pension products, to apparent ‘influencers’ offering trading tips. The next stage of our analysis was to understand the nature of the investment products and services being advertised on Meta platforms. This is essential to understand both the risks the adverts may pose to consumers, and the regulatory frameworks that govern them. This section summarises our findings.

Investment products, defined as offers which allow consumers to invest in an asset, may fall inside or outside the FCA regulatory perimeter depending on the nature of the asset. By contrast, we define investment services as offers which do not provide specific assets to consumers, but instead provide information or support with investment decisions. These services may also be regulated or not: investment ‘advice’, which offers a personalised recommendation, is regulated, while ‘guidance’, which offers broader, non-personalised information about investment, is not regulated (although it may still breach the CPRs, or amount to fraud or another fraud-related offence) and can be offered by any organisation [53].

We found that our sample was approximately evenly split between adverts for investment products and investment services, with a small number where both a product and an advisory service appeared to be offered together [54].

Illustrative examples of adverts are incorporated throughout this section and the following one. These adverts have been reproduced with minimal editing to remove names and contact details where necessary. Any spelling or grammatical errors are true to the original. In each case, these adverts have been chosen as typical examples of the types of adverts described.

Adverts for investment products

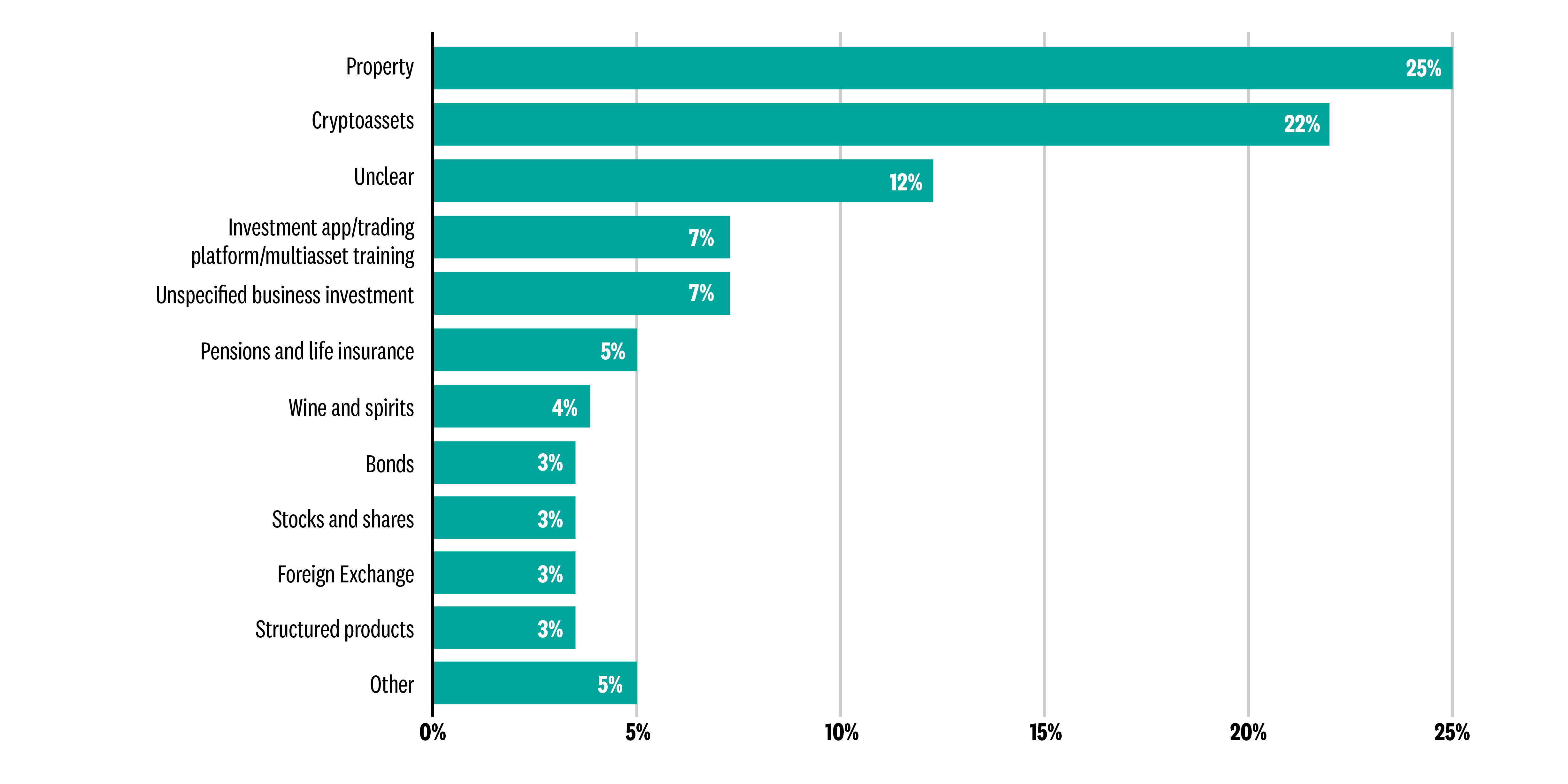

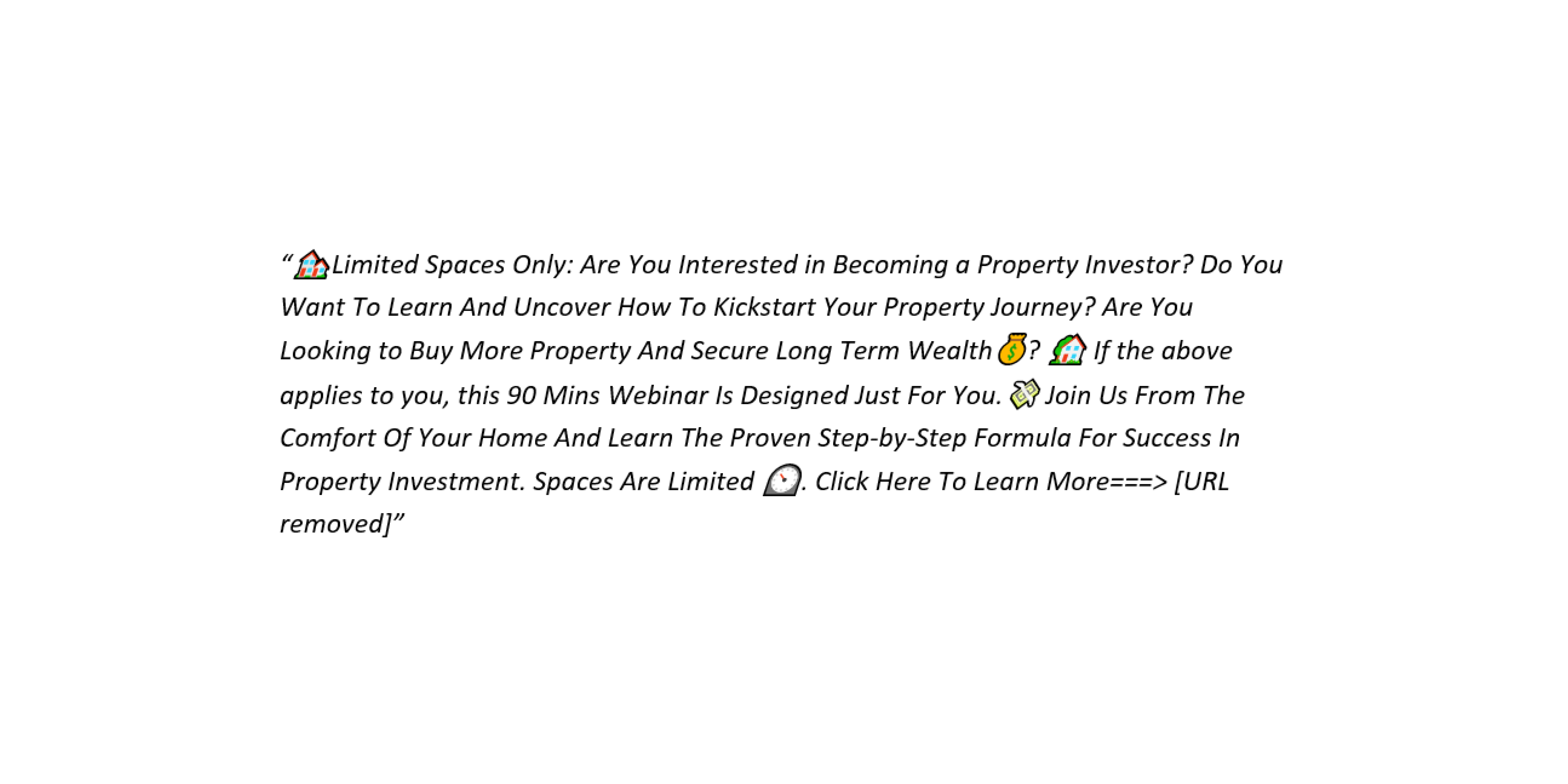

The most common investments advertised were properties, usually adverts for specific developments, both in the UK and overseas. These accounted for a quarter (25%) of product adverts in our sample.

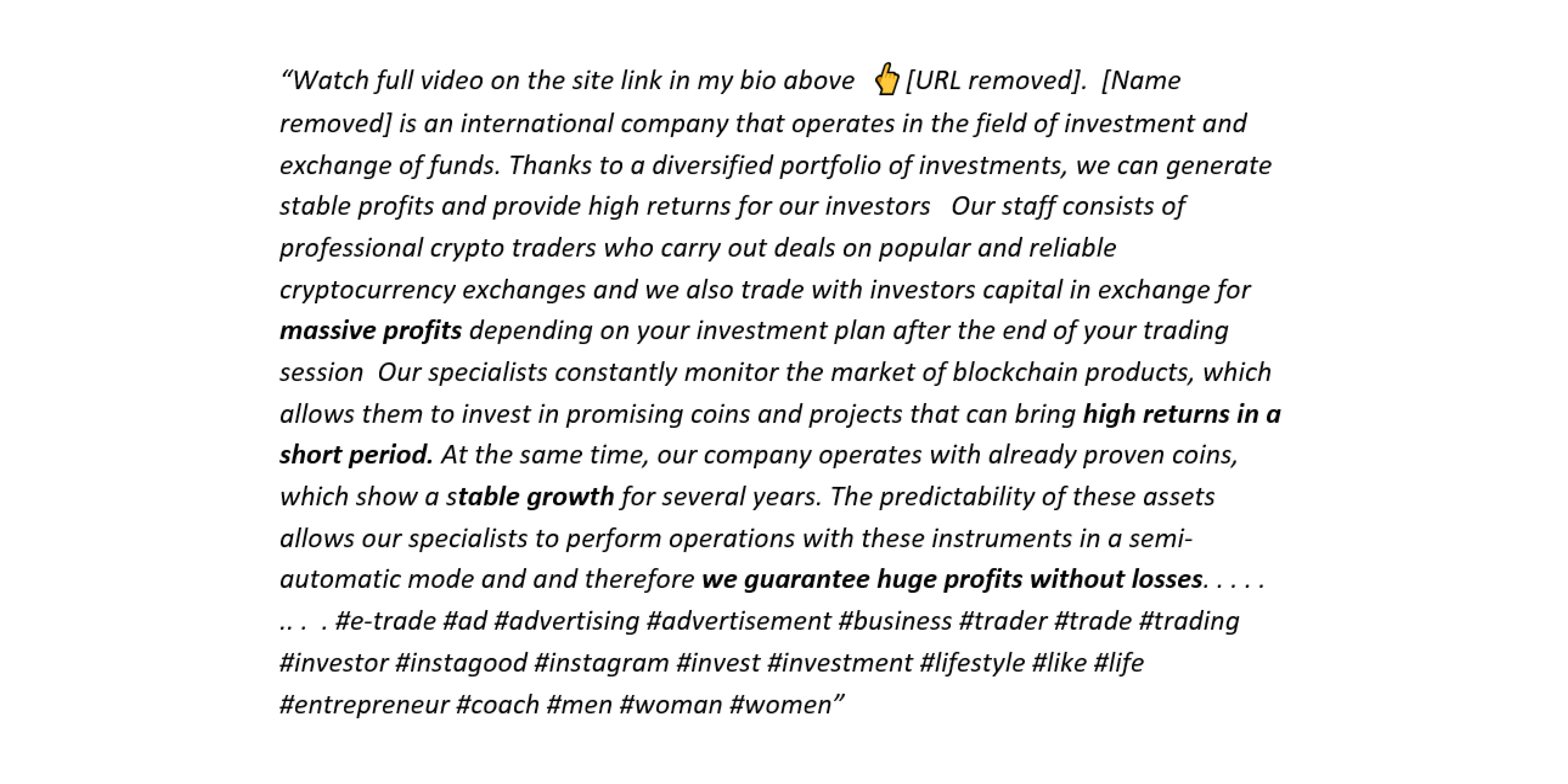

The next most common product type advertised was cryptoassets (cryptocurrencies and non-fungible tokens (NFTs)), which accounted for more than a fifth (22%) of product adverts, as illustrated in Figure 3.

Figure 3: Classification of investment products advertised on Meta platforms in our sample

The prevalence of adverts for cryptoassets is potentially a cause for concern given the complexity of these products, the lack of consumer protection and their price volatility [55], demonstrated by the significant losses experienced by UK retail savers during the 2022 crypto crash [56]. More worrying still, cryptoassets are an increasingly common source of investment fraud, with UK consumers losing £160.7m to these scams between January and August 2022 [57]. Cryptoassets’ position outside the regulatory system and the anonymity inherent in these products make them a scammer’s dream, and mean they pose a serious risk to consumers.

A further cause for concern is that the third largest category in the sample was adverts where the nature of the product being advertised was unclear (12% of products advertised). This is particularly worrying because adverts were only coded as product adverts where there was a clear request for the consumer to send funds: these adverts were asking for money without making clear what it would buy. They often offered high returns without clarifying how those returns would be obtained, as in the example below.

This lack of clarity was also inherent in the 7% of adverts offering unspecified business investments, asking people to invest cash in a business without clarifying what the specific instrument for that investment was. Investment and trading apps and platforms also accounted for 7% of adverts. Other types of products advertised included physical assets, like wine, whisky and art, and financial assets like foreign exchange, stocks and shares, bonds and structured products.

We were also concerned to find three adverts for binary options, a form of trading banned in the UK in 2019 [58].

Given how recently they were banned, their technical name, and their obscurity to those unfamiliar with the financial world, consumers may believe these are legitimate financial investments, although the FCA clearly states that “any firm offering binary options services is probably unauthorised or a scam” [59]. Binary option adverts are also explicitly prohibited in Meta’s content guidelines as a specific example of “financial products and services that are frequently associated with misleading or deceptive promotional practices”, along with Initial Coin Offerings and contracts for difference trading [60].

This simple descriptive analysis shows that as many as half of the investment products in our sample of adverts are not regulated by the FCA, and in some cases may pose specific, recognised risks to consumers. Cryptoassets and commodities (including art, precious metals and wines and spirits) are not regulated, while most property advertisements appeared to be for single properties, which would also fall outside the regulatory perimeter (although collective investment schemes are regulated) [61]. This suggests that relatively high risk investment opportunities are being advertised to consumers through Meta platforms.

Adverts for investment services

As Figure 4 (below) illustrates, the most common type of investment service advertised in our sample was trading information, ‘tips’, or training of various kinds. Some of these offered advice on a particular asset class, most commonly cryptocurrencies (15%). In these cases, it was often difficult to tell whether the recommendations would be personalised, constituting regulated advice, or generic, meaning they are unregulated.

Other services offered tips specifically about trading foreign exchange or stocks and shares. A substantial proportion of adverts (21%) offered a more generic approach – either specifically offering tips around multiple assets, or not clarifying what assets they were offering tips about.

Figure 4: Classification of investment services advertised on Meta platforms in our sample

As with adverts for investment products, property loomed large among the adverts for investment services: more than a quarter of service adverts in our sample were property related, usually offering advice on building a property investment portfolio.

Other services offered included trading algorithms or apps (coded as a service where it was not clear that these were linked to a platform actually holding funds and allowing trades to be executed), ‘wealth coaching’ services offering generic advice on how to get rich, and pensions and life insurance advice. Fewer service adverts than product adverts were coded as ‘unclear’, often because vague service adverts appeared to be offering wealth coaching. For example:

It is unclear how this service intends to actually improve the financial position of an individual, or what sort of strategies they will recommend, but it is clear that the intention is to make the individual wealthy.

Across both investment products and services, it is clear that adverts on Meta platforms are being used to encourage serious investments in assets ranging from the long-term (property and bonds) to highly novel and risky (cryptoassets). In the next section, we present the findings from our coding of advertisements, and explore the risks these adverts currently pose to consumers.

Summary

- We manually reviewed and coded 1,064 adverts drawn from the Meta Ad Library. Of these, 484 were found to be investment related. This sample was approximately evenly split between adverts for investment products, and investment-related services, for example advice and tips about investment.

- The most common investment products advertised in our sample were properties (25% of product adverts), and cryptoassets (22%). In most cases, both of these products will be unregulated.

- The third most common type of product advertisements in our sample were those where the specific product being offered was unclear.

- We found a small number of adverts for binary options, a form of trading banned in the UK in 2019.

- Advertisements for investment tips, training or advice were very common in the sample.

4. What risks do these adverts pose to consumers?

Our initial descriptive statistics, presented in Section 3, indicate the challenge that Meta and other platforms face in managing and moderating investment adverts online.

The range of investment products and services advertised is broad, and identifying which offers fall within the regulatory perimeter is a complex task, made more difficult by the limited information in adverts. Attempting to assess whether an advert is fraudulent from a short piece of text and some images is very challenging, particularly in investments, where the presentational difference between a genuine, unregulated, high-risk product and a scam may be invisible on the face of an advert, and only become apparent after further investigation.

This suggests that preventing fraudulent advertising at scale, as required by the Online Safety Bill, may be challenging. However, the harm this fraud causes means we cannot shy away from this task.

Understanding the nature of the risks that investment ads on social media platforms can pose is essential to helping us develop appropriate regulatory frameworks, both at a policy level and within platforms. In the next stage of our analysis, we tried to break down the problem by developing a set of indicators which attempt to assess the relative risk that different investment adverts can pose to consumers. Our intention is to provide a tool which helps to spot which adverts may pose a greater threat to consumers, either because, despite being genuine, they risk misleading, or because they are fraudulent. This tool does not seek to identify where an offence of fraud has been committed, but, together with other tools, it could help to identify adverts that are more likely to be scams. As such, this tool, and others like it for different types of products and services, could be useful in supporting the implementation of the Online Safety Bill.

Introducing our risk framework

Our coding framework was designed to explore whether investment advertisements on Meta platforms are providing sufficient information to consumers, in a clear way, to enable them to make informed decisions about investment products and services, and to identify where there are risks that consumers could be misled or misinformed. We hypothesise that adverts that attempt to scam consumers are more likely to mislead, for example by making grandiose promises, in order to encourage consumers to sign up for bogus offers.

This framework seeks to assess only the risk that an advert may mislead or may be fraudulent, not the risk of the underlying investment product. A product may be high-risk, i.e. with a high likelihood of financial losses, but as long as the consumer is warned that there is risk involved, the advertisement for that product would not be considered risky under our framework.

To build our framework, we drew on established regulations as a guide to potential elements of investment advertisements which, either by inclusion or omission, could mislead consumers; for example, the omission of a risk warning may leave consumers with the misplaced understanding that the product is not risky.

Specifically, we drew on the FCA’s Financial Promotions Rules (FPRs), which apply to products which are defined as regulated activities under the Financial Services and Markets Act 2000 [62], and the Consumer Protection from Unfair Trading Regulations (CPRs), which apply to all adverts, including those for unregulated financial products. While the FCA rules will not technically apply to many of the adverts in our sample, given the finding in Section 3 that many of the products advertised are unregulated, the expectations set out in the FPRs may nevertheless be viewed as good practice in communicating openly with consumers about investments, and so we suggest that they can be used more widely as a way to assess whether an investment advert may risk misleading a customer.

We identified four main ways in which investment adverts may mislead consumers:

- Not informing consumers of the risks involved in the proposed investment.

- Suggesting returns on investments are guaranteed or will be much higher than is likely in reality.

- Not being clear about the nature or status of the investment proposed.

- Using language that suggests an opportunity is time critical to create a sense of urgency.

All 484 investment-related adverts in our manual analysis sample were coded for the presence of these risk factors by researchers, using the 18-question coding framework in Annex A. The researchers focused on the advert description and images where available, reflecting the information immediately available to a consumer who is shown the advert.

This section describes the prevalence of each of these risk factors in our coded sample of adverts, explains how they are problematic, and offers examples of how they appear.

Risk factor 1: Not informing consumers about the risks involved in the proposed investment

Virtually all investments involve some risk – even sovereign nations can default on bonds. It is thus critical, in our view, that any advertisement for an investment product contains a risk warning, to avoid misleading consumers about the prospects of the product. Under the FCA financial promotions rules, this must also be given sufficient prominence, and provide enough information to allow prospective investors to make an informed decision [63].

However, in our sample of investment adverts on Meta platforms, risk warnings were scarce. Only 12% of investment-related adverts contained any sort of risk warning, rising only slightly, to 14%, among adverts for investment products. This low proportion suggests that these advertisers are not taking even minimal steps to inform consumers of the risks they could face should they take up these opportunities, and thus risk misleading consumers.

Even looking at a subsample of adverts for investment products which we considered likely to be regulated (bonds, stocks and shares, investment apps, pensions, life insurance and structured products; sample size 83) we found that only one in three (33%) contained a risk warning. While we cannot be sure that all of these adverts would be covered by the Financial Promotions Rules, this suggests that there may be violations of these regulations within our dataset.

While not warning consumers that investments are risky causes concern, it is even more worrying that some adverts made statements to suggest that investments were not risky, or were ‘risk-free’. Overall, we found that 8% of adverts in the sample made explicit statements of this nature, rising to one in 10 (10%) of adverts for investment products.

Additionally, we found that 9% of investment adverts in the sample claimed that the capital deposited was not at risk. While complex contracts can be drawn up to protect capital, these are unusual; without them, such statements are likely to mislead consumers, and may indicate that the offer is fraudulent.

Even where ads did not suggest that investments were risk-free, consumers could still be misled about how risky the opportunity is. We found that 15% of adverts included statements which could be seen as playing to the consumers’ peace of mind, reassuring them, or suggesting that there is nothing to lose. This may mislead consumers about the true risks of the opportunity.

Each of these factors in an advert increases the likelihood that consumers are misled or not properly informed about the risks involved in taking up an investment-related offer, preventing them from making informed decisions.

Risk factor 2: Suggesting returns on investments are guaranteed or will be much higher than is likely in reality

Just as investments inevitably involve risk, very few products can guarantee returns with any significant level of certainty (the exceptions being some government bonds, bank savings accounts or certificates of deposit, and annuities). Other assets, like corporate bonds, may offer a higher degree of certainty than stocks and shares, but the issuing companies can be dissolved, in which case returns vanish. We therefore think that, to avoid being misleading, most investment adverts should leave consumers with the clear impression that returns could vary.

In our sample, we found that 13% of adverts were claiming that returns were guaranteed, either in words or by promising a fixed amount or percentage without any indication that this may not be achieved in practice. This rose to 19% among advertisements for investment products, and was still 12% among those products likely to be regulated.
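As a rough illustration of how this coding question might be approximated automatically, the Python sketch below flags text that uses the word ‘guarantee’ (outside negations such as ‘not guaranteed’), or that promises a fixed percentage or cash amount with no hedging language. The phrase lists are assumptions made for illustration. This is not the procedure our researchers used, which relied on human judgement, and simple patterns like these will miss paraphrases and can misfire, for example on fee percentages.

```python
import re

# Explicit wording: "guarantee", "guaranteed", "guarantees"
GUARANTEE_WORD = re.compile(r"\bguarantee[ds]?\b", re.IGNORECASE)
# Negated forms that should not count as a guarantee claim
NEGATED_GUARANTEE = re.compile(r"\bnot\s+guaranteed\b|\bno\s+guarantee", re.IGNORECASE)
# A fixed percentage or cash amount, e.g. "30%" or "£500"
FIXED_RETURN = re.compile(r"\d+(?:\.\d+)?\s?%|[£$€]\s?\d[\d,]*")
# Hypothetical phrases signalling that returns may not be achieved
HEDGES = re.compile(
    r"capital (?:is )?at risk|not guaranteed|no guarantee|returns (?:may|can) vary"
    r"|(?:may|can) (?:go|fall) down|may lose",
    re.IGNORECASE,
)


def flags_guaranteed_returns(text: str) -> bool:
    """Heuristic stand-in for the 'claims returns are guaranteed' coding question."""
    # Explicit claim: the word 'guarantee' appears and is not negated
    explicit = bool(GUARANTEE_WORD.search(text)) and not NEGATED_GUARANTEE.search(text)
    # Implied claim: a fixed figure is promised with no indication it may not be achieved
    implied = bool(FIXED_RETURN.search(text)) and not HEDGES.search(text)
    return bool(explicit or implied)


print(flags_guaranteed_returns("Guaranteed 30% monthly returns!"))  # True
print(flags_guaranteed_returns("Earn £500 a week from home"))  # True
print(flags_guaranteed_returns("Invest from £100. Capital at risk, returns may vary."))  # False
```

Treating an explicit ‘guaranteed’ as a flag even when a risk warning also appears follows the coding rule as described: the claim itself is the problem, whereas a bare figure only counts when nothing suggests it may not be achieved.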

The danger of these advertisements is brought home by those promising huge returns and no losses from investments in cryptocurrencies. With many cryptoassets dramatically falling in value in recent months, a consumer who trusted a ‘guarantee’ may now be facing significant losses.

Figure 5 offers another example, in this case promising fixed returns depending on the sum invested, with no clarity about how these returns will be achieved.

Figure 5: Example advert found on Meta’s Ad Library

The FCA specifies that a promotion should not describe a feature of a product or service as ‘guaranteed’ unless this is capable of being achieved, and is not misleading. Arguably this should also be the case for non-regulated investment promotions, if they are not to risk misleading a customer. While we cannot know without further investigation whether the promises made in specific advertisements are true, the prevalence of these claims in our samples invites some suspicion.

A substantial number of adverts were also found to make dramatic claims about the nature of the returns that could be expected from the investment. Nearly one in five adverts promised “massive” returns or suggested that they could be life changing, for example allowing the investor to live on the returns as a form of passive income, removing the need to work, or making someone a millionaire.

These types of claims were more common among adverts for investment services, with more than a quarter (28%) of these adverts found to make strong claims about potential returns.

While we cannot be certain about the likely return of any of these schemes, the FCA requires that promotions offer a “balanced impression of both the short and long-term prospects for the investment” [64]. While many of these promotions are not regulated, this could nonetheless be seen as a reasonable step to take to minimise the risk of misleading customers. The focus on positive, and sizable, rewards for investments in adverts in our sample, particularly alongside a lack of risk warnings or mentions of potential losses, is thus a cause for concern.

Risk factor 3: Not being clear about the nature or status of the investment proposed

Understanding the type of investment being made is critical to allowing the consumer to reach an informed understanding of the risks involved and whether this matches their preferences. For this reason, we would expect adverts for investments to be clear about what is involved.

However, a significant number of the adverts in our sample were judged to be vague about the nature of the investment opportunity and how returns would be realised. Four in ten (40%) of the ads were coded as being vague, with this being slightly higher among adverts for investment services, and slightly lower (but still 38%) among products.

The level of vagueness varies among these adverts. Some are clear that they are offering a particular type of product or advice, but with no specifics about how that will create returns for the consumer. This cryptocurrency advert, for example, is unclear about which specific assets will provide these remarkable returns, and although it claims the category is ‘mining’, hashtags then mention a range of other assets. It is unclear whether this is to generate traffic, or if these assets are also involved in the offer.

Other adverts, while implying they are selling a specific product with set returns, offer no details as to the nature of this product.

Others are completely vague, appealing to the consumer’s desire to make money rather than any specifics of their product or service.

With each of these adverts, it is difficult to understand what exactly is being offered to the consumer. The last, despite promising significant returns, is completely opaque about what mechanism will generate them. This makes it very difficult for the consumer to understand the nature of the offer, and to assess its suitability for their needs.

While in some cases vagueness may be inevitable in a short advertisement, clarity about whether a product or service is regulated or not is one simple way to help consumers understand the likely risks.

The FCA requires that regulated financial promotions name the relevant regulator, or clarify that the products are not regulated [65]. Very few adverts in the sample mentioned regulation one way or another: only 2% of adverts, including 3% of those for products, suggested that the offer was regulated. Even allowing for the small number of regulated products in the sample, this suggests that compliance with this requirement is low.

Even more worrying, we found a small number of adverts which appear to make false claims to be regulated. We checked every firm suggesting it was regulated against the register it named, and identified two cases where firms claiming to be regulated could not be found on the register. This is a banned practice under Schedule 1 of the Consumer Protection from Unfair Trading Regulations 2008, as suggesting a product is regulated when it is not is highly likely to mislead a customer.

Another tactic judged to be present in 19% of our sample of adverts was making favourable comparisons between the product advertised and other investment opportunities. In many cases, these statements suggested that a firm was the best, or a leader in the market, without any clarity on how this judgement was being made.

Figure 6: Vague advert for a ‘crypto investment company’ found on Meta’s Ad Library

Again, this may risk misleading the consumer about the nature of the opportunity. The FCA says that comparisons must be “meaningful and presented in a fair and balanced way” [66]. While the promotions making these comparisons may not be subject to these regulations, arguably the way in which these comparisons are made, without balance, could risk misleading investors.

Together, each of these factors could reduce the likelihood that a consumer is able to form a genuine impression of the investment opportunity from the advertisement, reducing their ability to understand the likely costs and benefits to reach an informed decision.

Risk factor 4: Using language that suggests an opportunity is time critical to create a sense of urgency

Vague statements or information which obscures the nature of an investment opportunity make it difficult for consumers to effectively assess the risks involved. However, advertisers can also influence customer behaviour more subtly in the statements they make about the urgency of the opportunity and its availability. Such practices were common in the dataset.

More than one in ten of our sampled investment adverts were identified as suggesting that the opportunity was time limited, or introducing a sense of urgency by using language like “don’t miss your chance”.

This could give consumers an impression of scarcity, which may interact with their loss aversion to increase the attractiveness of a product: we feel the effect of losses more than gains, so the fear of missing out can be a powerful motivator. Worryingly, adverts for investment products, where consumers were being asked to commit funds, were more likely to use these tactics, with 16% of these adverts identified as introducing a sense of urgency.

How risky are these adverts?

Our coding flags characteristics of adverts which risk misleading a consumer, mostly through a lack of clear, balanced information, or through potential emotional manipulation preying on behavioural biases. However, it is not possible to say what impact these would have on consumer behaviour in any specific case. Moreover, with many of the adverts in our sample not falling within the FCA’s regulatory perimeter, we cannot say that there is wrongdoing here. Looking at individual risk factors in this way helps us understand the types of risks consumers are exposed to through these adverts, but does not give us an understanding of the harm actually created.

However, an advert which demonstrates several of these risk factors may reasonably be considered to pose a greater risk to consumers, either by misleading, or by being fraudulent. While we cannot be certain which adverts in our sample are genuine and which are fraudulent, we believe that those adverts which raise a higher number of these risk flags may be more likely to be scams, on the basis that those intending to defraud consumers are more likely to seek to accelerate a transaction, to imply that the transaction is low risk, and to seek to reassure the consumer, or to tempt them with the promise of life-changing returns into taking a risk much larger than they usually would.

To assess the cumulative risk posed to consumers, we can look across our coding framework to give an overall assessment of the level of risk associated with each advert. To do this, we split our coding questions into two categories, listed below. ‘Red flags’ are those where, in our judgement, the advert is missing information that is essential for a consumer to properly judge risk, or includes content which has a high risk of being misleading. ‘Amber flags’ show characteristics that may, in some circumstances, pose a risk to a consumer, but where the risk is less clear cut.

Red and amber flags in investment related adverts

Red flags:

- No risk warning.

- Claiming returns are guaranteed.

- Claiming capital is secure.

- Claiming that the investment is not risky or risk-free.

- Sensationalising the benefits of investing, for example promising ‘massive’ or life-changing returns.

- Suggesting the opportunity is time limited or creating a sense of urgency.

- Falsely claiming the product or service is regulated.

- Playing to the consumer’s peace of mind.

- Making statements that may scare the consumer into investing.

Amber flags:

- Making positive comparisons to other opportunities.

- Being vague about how value will be generated.

- Failing to clarify whether the offer is regulated.

Using this approach in our sample of 484 manually coded investment adverts, we identified 89 adverts with three or more red flags, of which 23 had five or more red flags. These adverts were among the most concerning in the dataset and are, in our judgement, likely to cause consumer detriment. For example, this approach successfully identifies the following advert, which offers binary options trading, banned in the UK since 2019:

This advert combines promises of complete safety and incredibly high returns over very short periods with encouragement to sign up immediately. While we cannot know for certain from the face of the advert that it is fraudulent, the presence of several risk factors means there is an extremely high likelihood that it is. It is therefore a worthy candidate for further investigation to protect consumers.
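The red and amber flags listed above, together with the thresholds of three and five red flags, can be expressed as a short scoring routine in Python. In this sketch the flag identifiers are our own shorthand for the listed flags and the adverts are invented. Ordering adverts for review by red flags and then amber flags is an assumption about how such scores might feed human triage, not a step described in this report.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Shorthand identifiers for the red and amber flags listed above
RED_FLAGS = {
    "no_risk_warning", "guaranteed_returns", "capital_secure", "not_risky",
    "sensational_returns", "urgency", "false_regulation_claim",
    "peace_of_mind", "scare_tactics",
}
AMBER_FLAGS = {"positive_comparison", "vague_value", "regulation_unclear"}


@dataclass
class CodedAdvert:
    advert_id: str
    flags: Set[str] = field(default_factory=set)

    @property
    def red_count(self) -> int:
        return len(self.flags & RED_FLAGS)

    @property
    def amber_count(self) -> int:
        return len(self.flags & AMBER_FLAGS)


def summarise(adverts: List[CodedAdvert]) -> Dict[str, int]:
    """Count adverts with three or more red flags, and the subset with five or more."""
    return {
        "three_or_more": sum(a.red_count >= 3 for a in adverts),
        "five_or_more": sum(a.red_count >= 5 for a in adverts),
    }


def review_order(adverts: List[CodedAdvert]) -> List[CodedAdvert]:
    """Hypothetical triage: most red flags first, amber flags as a tie-breaker."""
    return sorted(adverts, key=lambda a: (-a.red_count, -a.amber_count))


# Invented coded adverts, not drawn from the study's sample
adverts = [
    CodedAdvert("ad_103", {"no_risk_warning", "regulation_unclear"}),
    CodedAdvert("ad_101", {"no_risk_warning", "guaranteed_returns", "not_risky",
                           "sensational_returns", "urgency"}),
    CodedAdvert("ad_102", {"no_risk_warning", "urgency", "peace_of_mind", "vague_value"}),
]

print(summarise(adverts))  # {'three_or_more': 2, 'five_or_more': 1}
print([a.advert_id for a in review_order(adverts)])  # ['ad_101', 'ad_102', 'ad_103']
```

Because amber flags only break ties, an advert can never overtake one with more red flags, which keeps the ranking consistent with the red-flag thresholds used in this section.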

Challenges and reflections

Our manual coding suggests that significant numbers of investment adverts on Meta platforms have characteristics that risk misleading consumers, and which may indicate that adverts are more likely to be fraudulent. However, identifying them is a challenge. Even our experienced consumer researchers found coding these often vague adverts challenging, and reconciling the coding required many hours’ work. We recognise that, in practice, assessing all adverts on Meta or similar platforms in this way is not likely to be feasible.

And, even once coding is completed, risk factors alone do not help us differentiate risky legitimate propositions from fraudulent ones. This approach is not a panacea. But a better, more granular understanding of the risk factors present in investment advertisements may help to develop automated systems which identify the adverts that pose the greatest risk of being misleading or fraudulent. This, alongside earlier prevention techniques including due diligence at account entry level, will help triage risky adverts for human assessment. In the next section, we explore this possibility.

Summary

- The diversity of investment adverts, and the existence of legitimate, unregulated and high risk promotions alongside fraudulent ones, make preventing fraudulent advertising at scale difficult.

- Understanding the ways in which investment adverts can potentially mislead consumers can help us identify those that pose the greatest risk.

- Drawing on existing regulation, we identified four main ways in which investment adverts may mislead consumers:

  - Not informing consumers of the risks involved in the proposed investment.

  - Suggesting returns on investments are guaranteed or will be much higher than is likely in reality.

  - Not being clear about the nature or status of the investment proposed.

  - Using language that suggests an opportunity is time critical, to create a sense of urgency.

- Our human analysts coded 484 investment adverts by hand for the presence of these risk factors.

- Looking at the number of these flags raised by each advert provides us with a way of assessing the potential risk they pose to a consumer. While we can’t be certain without further investigation which adverts are fraudulent, those raising many flags are likely to pose a higher risk, as fraudsters try to tempt their victims by minimising concerns about risk, making dramatic promises and accelerating the transaction as fast as possible.

- This approach to identifying risk provides a tool that can be useful for the implementation of the Online Safety Bill.

5. Detecting risky advertising at scale

The sale and display of advertising is a major driver of revenue for Meta: company reports suggest advertising revenue of over $28 billion in the second quarter of 2022 [67]. Our analysis above is based on a small, manually coded sample of adverts shown to people in the UK, and shows that careful human investigation must continue to play a role in deciding whether an advert is likely to pose a risk to people. However, the sheer scale of Meta’s platforms means that relying on human detection alone is likely to be prohibitively slow and expensive. Effectively protecting consumers will require some form of automated detection of adverts likely to raise red flags.

Meta is already a heavy user of automated detection systems: its terms of service explain that its ad review process ‘relies primarily on automated tools to check ads’ [68]. We wanted to test whether using an algorithm to detect adverts flagged through our risk framework was realistic. To do so, we trained a series of algorithms to detect adverts matching the risk flags laid out above. We found that, while imperfect, these automated models were effective at flagging high-risk adverts within our dataset, and could play a key role in keeping consumers safe.

Our approach

To test whether automatic labelling might help detect high-risk content, we trained a series of algorithms called ‘neural nets’ [69]. This technology has many uses, but in our case these models can be thought of as computer programs that can be taught to make decisions about data that would traditionally require a human analyst, for example whether an advert contains language implying that an investment opportunity is time limited. To make these distinctions, the model is ‘trained’ on a reliable dataset coded by humans. It learns patterns from these examples, which it can then apply to new, unseen data.
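As a rough illustration of what ‘training’ means here, the sketch below fits the simplest possible neural net, a single sigmoid unit over word counts (equivalent to logistic regression), to a handful of hand-labelled examples and then applies it to unseen text. The models used in this project were more sophisticated and are described in the technical annex; the example texts, labels, learning rate and number of epochs here are invented for illustration.

```python
import math
import re
from collections import defaultdict


def tokenise(text: str) -> list[str]:
    # Lower-cased words only; a real system would use a richer tokeniser.
    return re.findall(r"[a-z']+", text.lower())


class SingleNeuronClassifier:
    """One sigmoid unit over word counts, i.e. logistic regression."""

    def __init__(self, learning_rate: float = 0.5, epochs: int = 200):
        self.lr = learning_rate
        self.epochs = epochs
        self.weights: dict[str, float] = defaultdict(float)
        self.bias = 0.0

    def _probability(self, tokens: list[str]) -> float:
        # .get avoids adding unseen words to the weight table at prediction time.
        z = self.bias + sum(self.weights.get(t, 0.0) for t in tokens)
        z = max(-30.0, min(30.0, z))  # clamp so exp() cannot overflow
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, texts: list[str], labels: list[int]) -> "SingleNeuronClassifier":
        data = [(tokenise(t), y) for t, y in zip(texts, labels)]
        for _ in range(self.epochs):
            for tokens, y in data:
                # For log-loss, the gradient with respect to the unit's input is (p - y).
                error = self._probability(tokens) - y
                self.bias -= self.lr * error
                for t in tokens:
                    self.weights[t] -= self.lr * error
        return self

    def predict(self, text: str, threshold: float = 0.5) -> int:
        return int(self._probability(tokenise(text)) >= threshold)


# Invented training data: 1 = language implying the offer is time limited.
texts = [
    "act now offer ends today",
    "limited time only act now",
    "hurry offer closes tonight",
    "learn about our university course",
    "gardening tips for spring",
    "read our cooking blog",
]
labels = [1, 1, 1, 0, 0, 0]
clf = SingleNeuronClassifier().fit(texts, labels)
print(clf.predict("act now today"))                # 1
print(clf.predict("university gardening course"))  # 0
```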

The advantage of this approach is that it allows analysts to label data at scale. By training on a small coded sample, a neural net can be used to classify a much larger dataset which would take too long to label by hand; for example, every advert shown to UK Meta users. Models were trained for this experiment in two stages:

Relevance

The first classifier labelled adverts according to whether they were relevant to investment – this was used to remove adverts which, for example, described university courses as ‘investing in your future’. This classifier analysed the following features of an advert in making a decision:

- The free-text ‘description’ field shown to consumers.

- Any emoji used within this advert’s description field.

- The free-text ‘page info’ field used to describe the page which owned the advert.
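A minimal sketch of how the three inputs listed above might be assembled for the relevance classifier. The field names, the `RelevanceInput` type and the emoji code-point ranges are assumptions for illustration; the ranges cover common emoji blocks only, and full emoji detection is more involved.

```python
from dataclasses import dataclass

# Approximate emoji code-point ranges (an assumption; real emoji detection is more involved).
EMOJI_RANGES = [(0x1F300, 0x1FAFF), (0x2600, 0x27BF)]


def extract_emoji(text: str) -> list[str]:
    """Return characters that fall inside the approximate emoji ranges, in order."""
    return [ch for ch in text if any(lo <= ord(ch) <= hi for lo, hi in EMOJI_RANGES)]


@dataclass
class RelevanceInput:
    description: str  # free-text 'description' field shown to consumers
    emoji: list[str]  # emoji found in that description
    page_info: str    # free-text 'page info' field of the advertiser's page


def build_relevance_input(description: str, page_info: str) -> RelevanceInput:
    return RelevanceInput(description, extract_emoji(description), page_info)


example = build_relevance_input("Grow your savings fast 🚀💰", "Gardening tips and tools")
print(example.emoji)  # ['🚀', '💰']
```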

Risk flags

Adverts labelled as relevant in the first stage of classification were then used to train a set of four classifiers, one for each of the following risk flags:

- Adverts sensationalising the benefits of investing, for example promising ‘massive’ or life changing returns.

- Adverts claiming returns are guaranteed.

- Adverts suggesting the opportunity is time limited or creating a sense of urgency.

- Adverts playing to the consumer’s peace of mind.

These flags were chosen because they were the four most prevalent labels in the dataset, giving the algorithms more data to learn from. In addition to the fields listed above, the risk classifiers also took into account several features that were found to be salient during the manual coding, namely:

- The percentage of an advert’s description written in capital letters, which often indicated an emotive attempt to generate a sense of urgency.

- The number of likes or followers on the advertiser’s page, which helped flag pages which had been very recently created or had not gathered any attention.

- The industry which a page described itself as belonging to. We found that much of the most highly suspect content was posted on pages with a description which had nothing to do with investment, such as ‘animals’ or ‘gardening’.
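The three additional features above are simple to compute. The sketch below shows one possible encoding, assuming hypothetical function names and an illustrative list of investment-related page categories; taking the logarithm of the like count is our own choice, made so that pages with millions of likes do not swamp the signal from pages with almost none.

```python
import math

# Illustrative set of page categories treated as investment related (an assumption).
INVESTMENT_CATEGORIES = {"finance", "financial service", "investing"}


def capitals_pct(text: str) -> float:
    """Percentage of alphabetic characters in the text that are upper case."""
    letters = [c for c in text if c.isalpha()]
    if not letters:
        return 0.0
    return 100.0 * sum(c.isupper() for c in letters) / len(letters)


def extra_risk_features(description: str, page_likes: int, page_category: str) -> dict[str, float]:
    return {
        "caps_pct": capitals_pct(description),
        # log1p compresses very large like counts and maps 0 likes to 0.0.
        "log_page_likes": math.log1p(page_likes),
        # 1.0 when the page describes itself as something unrelated to investment.
        "off_topic_page": float(page_category.strip().lower() not in INVESTMENT_CATEGORIES),
    }


print(extra_risk_features("ACT NOW", 0, "Gardening"))
# {'caps_pct': 100.0, 'log_page_likes': 0.0, 'off_topic_page': 1.0}
```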

The collection and labelling process is illustrated in Figure 7. This method used only flags derived from the content of each advert; it does not include flags from due diligence checks or account behaviour, which Meta could apply.

Figure 7: Classifier architecture
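The two-stage flow can be sketched as follows. Stage one discards adverts judged irrelevant to investment; stage two runs one independent classifier per risk flag and collects the flags raised. The keyword rules in the usage example are crude placeholders standing in for trained models, and the flag names are hypothetical; neither reflects the classifiers used in this project.

```python
from typing import Callable

# A classifier here is any function mapping advert text to True/False.
Classifier = Callable[[str], bool]


def label_advert(text: str, is_relevant: Classifier, risk_models: dict[str, Classifier]) -> list[str]:
    """Stage 1: drop non-investment adverts. Stage 2: apply each risk-flag model."""
    if not is_relevant(text):
        return []
    return [flag for flag, model in risk_models.items() if model(text)]


# Crude keyword placeholders standing in for trained classifiers (hypothetical).
def is_relevant(text: str) -> bool:
    return any(w in text.lower() for w in ("invest", "trading", "returns"))


risk_models: dict[str, Classifier] = {
    "sensationalised_benefits": lambda t: "massive" in t.lower(),
    "guaranteed_returns": lambda t: "guaranteed" in t.lower(),
    "urgency": lambda t: "now" in t.lower().split(),
    "peace_of_mind": lambda t: "risk-free" in t.lower(),
}

print(label_advert("Invest now for GUARANTEED massive returns", is_relevant, risk_models))
# ['sensationalised_benefits', 'guaranteed_returns', 'urgency']
print(label_advert("Spring gardening tips", is_relevant, risk_models))  # []
```

Keeping one model per flag means each can be retrained or replaced on its own as more coded data becomes available.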

Performance

Automated classification is a probabilistic process, and the classifiers trained for this project are imperfect tools. Their effectiveness varied: while their raw accuracy ranged from 77% to 91%, more detailed performance metrics show that each will miss some adverts that should be coded as risky, and that some of the flags it does raise will be wrong. Overall performance is outlined below, with full metrics and a detailed description of the training process provided in the technical annex.
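Raw accuracy can flatter a classifier when risky adverts are rare. The arithmetic below uses a hypothetical split of 88 positive and 462 negative examples (roughly the 16% positive rate mentioned under ‘Quantity of annotated data’) to show that a model which never raises a flag would still score 84% accuracy while catching none of the risky adverts. It illustrates the pitfall only and does not describe the performance of our models.

```python
def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: list[int], y_pred: list[int]) -> float:
    """Share of truly risky adverts (label 1) that the model flagged."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(positives) / len(positives) if positives else 0.0


# Hypothetical data set: 88 risky and 462 non-risky adverts (about 16% risky).
y_true = [1] * 88 + [0] * 462
never_flags = [0] * len(y_true)  # a "model" that never raises the flag

print(round(accuracy(y_true, never_flags), 2))  # 0.84
print(recall(y_true, never_flags))              # 0.0
```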

This variable performance is one reason why human oversight remains important. Our human coders often found it difficult to agree on how to code adverts, because the information in them was limited and often vague, leaving room for different interpretations. This shows the importance of informed judgement in detecting these adverts, and places limits on what we can expect machines, which learn from these human labels, to achieve.
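One common way to quantify how far two coders agree, beyond what chance alone would produce, is Cohen’s kappa. The sketch below is illustrative only: we do not claim this statistic was used in this project, and the two coders’ labels are invented.

```python
from collections import Counter


def cohens_kappa(coder_a: list[int], coder_b: list[int]) -> float:
    """Agreement between two coders, corrected for agreement expected by chance."""
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    count_a, count_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: probability both coders pick the same label independently.
    expected = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (observed - expected) / (1 - expected)


# Invented labels for one risk flag on four adverts (1 = flag applies).
coder_a = [1, 1, 0, 0]
coder_b = [1, 0, 0, 0]
print(cohens_kappa(coder_a, coder_b))  # 0.5
```

Here the coders agree on three of four adverts (75%), but because 50% agreement would be expected by chance, kappa is only 0.5.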

Having developed the framework, we wanted to test whether automatic labelling could help human teams filter the vast quantity of content published on Meta platforms by flagging adverts likely to pose a high risk to consumers. To test this, we processed a dataset of 6,357 adverts through the system illustrated above. The system applied at least one risk flag to 186 of these. To check this output, human analysts reviewed 100 of the flagged adverts, starting with those raising the highest number of flags.
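The review order described above, flagged adverts only and those with the most flags first, can be expressed as a simple ranking. This is a sketch with hypothetical advert IDs and flag names; adverts with equal flag counts keep their original order because Python’s `sorted` is stable, including when `reverse=True`.

```python
def review_queue(flags_by_advert: dict[str, list[str]], k: int) -> list[str]:
    """Return up to k flagged advert IDs, most flags first."""
    flagged = {ad: flags for ad, flags in flags_by_advert.items() if flags}
    # sorted() is stable, so adverts with equal flag counts keep their original order.
    ranked = sorted(flagged, key=lambda ad: len(flagged[ad]), reverse=True)
    return ranked[:k]


classifier_output = {
    "ad-1": ["urgency"],
    "ad-2": [],
    "ad-3": ["guaranteed_returns", "urgency", "peace_of_mind"],
    "ad-4": ["guaranteed_returns", "urgency"],
}
print(review_queue(classifier_output, k=2))  # ['ad-3', 'ad-4']
```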

We found that, even with classifiers of limited accuracy, this approach was extremely effective at surfacing adverts likely to be risky. When human analysts reviewed the adverts flagged by the automated system, they confirmed that:

- 22% raised eight of the serious risk flags described in the framework.

- 57% raised three or more.

- 91% raised at least one.

This leaves 9% that had been incorrectly flagged.

By comparison, when humans alone assessed a separate sample of 1,319 adverts against the framework questions:

- 0.9% raised eight flags.

- 12.6% raised three or more.

- 43% raised at least one.

This experiment demonstrates that machine filtering using our framework can greatly increase the proportion of adverts raising risk flags within a sample passed on for human review, as illustrated in Figures 8 and 9. This could make takedowns more timely and more accurate.

Figure 8: Percentage of adverts with multiple risk flags in initial sample of 1,319 adverts, without machine processing

Figure 9: Percentage of adverts with multiple risk flags within 100 adverts labelled with at least one risk flag by our classifiers
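Dividing the shares in the machine-filtered sample by the shares in the human-only sample gives a rough sense of how much filtering concentrated risky adverts. The figures are taken from the text above; because the two samples differ in size and composition (100 versus 1,319 adverts), the resulting ratios are indicative only.

```python
# Shares of adverts (%) reported above for each sample.
machine_filtered = {"eight flags": 22.0, "three or more": 57.0, "at least one": 91.0}
human_only = {"eight flags": 0.9, "three or more": 12.6, "at least one": 43.0}

# How many times more common each outcome was after machine filtering.
enrichment = {k: machine_filtered[k] / human_only[k] for k in machine_filtered}

for outcome, ratio in enrichment.items():
    print(f"{outcome}: {ratio:.1f}x")
# eight flags: 24.4x
# three or more: 4.5x
# at least one: 2.1x
```

On these figures, an advert passed on by the classifiers was roughly 4.5 times as likely to raise three or more flags as one in the human-only sample.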

If Meta applied this approach, we believe it would be likely to allow the kind of risky content we are concerned about to be flagged to human reviewers and rejected before it is published. It could also help those combatting scams to monitor emerging tactics more effectively.

Case study: TESLER

One collection of adverts flagged by the algorithms trained to identify high-risk content used extremely similar description text, often focused around a piece of software called ‘Tesler’. When coded by humans, 20 adverts for Tesler each raised eight separate serious risk flags, making these adverts some of the most-flagged content in our collection. Examining the wider dataset, we found that similar adverts were scattered throughout our data collection. As the examples below show, these adverts seem to be minor variations on a common theme – copied and pasted in a way which does not always make sense.
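Adverts that are minor variations on a common text can be grouped automatically. A minimal sketch, assuming word trigrams and a Jaccard similarity threshold of 0.5 (both arbitrary choices), is below; the example texts are invented and are not taken from the Tesler adverts. Comparing every pair grows quadratically with the number of adverts, so at platform scale a technique such as MinHash would typically be used to approximate the same similarity.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Overlapping n-word sequences from lower-cased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the overlap divided by size of the union (1.0 for two empty sets)."""
    return len(a & b) / len(a | b) if a or b else 1.0


def near_duplicate_pairs(ads: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str]]:
    """All pairs of advert IDs whose shingle sets overlap by at least `threshold`."""
    sets = {ad_id: shingles(text) for ad_id, text in ads.items()}
    ids = list(ads)
    return [
        (x, y)
        for i, x in enumerate(ids)
        for y in ids[i + 1:]
        if jaccard(sets[x], sets[y]) >= threshold
    ]


ads = {  # invented example texts
    "ad-1": "new trading software makes ordinary people rich in weeks",
    "ad-2": "new trading software makes ordinary people rich in days",
    "ad-3": "spring gardening tips for beginners",
}
print(near_duplicate_pairs(ads))  # [('ad-1', 'ad-2')]
```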

Figure 10: Image included in an advert for Tesler, posted on a page called ‘Description’ on 16 August 2022

The name ‘TESLER’ closely resembles that of the automotive brand ‘Tesla’. Mentions of a ‘programming genius’ and a ‘technological revolution’, both concepts consumers may associate with the innovative car manufacturer, appear to reinforce this potentially misleading association.

One advert linked to a Google-generated website that uses the branding of the established investment magazine Forbes and appears to endorse the product. When Which? approached Forbes, it confirmed that it had never endorsed or had any connection with Tesler. In 2021, the FCA issued a warning about a scam investment company using the brand name ‘Tesler’ and impersonating a regulated trading company based in the UK [70]. While we cannot be certain that these adverts come from the same group, we were also unable to find any evidence of a real, registered company called ‘Tesler Investments’. Some of the adverts also offer binary trading products, which the FCA banned from sale to retail consumers in the UK in 2019.

When a Which? researcher clicked through on a Tesler ad, they were prompted to enter their contact details. In less than an hour, they were called by a representative of the organisation and pressured to set up a trading account, amid claims that its “sophisticated algorithm ... plays the trade with an 87% success rate”.

Together, these factors suggest that these Tesler adverts are likely to be a scam.

The Tesler adverts in our dataset hit several of our risk flags, including suggesting profits are guaranteed, minimising the apparent risk of the investment by suggesting it offers “only profitable trades”, and encouraging consumers to sign up immediately. While this is only one case study, it provides initial validation for our proposal that identifying and analysing risk flags can help to identify misleading and potentially fraudulent adverts.

We collected 39 unique advertisements mentioning Tesler between 16 November 2021 and 24 March 2022, and searches conducted at the time of writing confirm that adverts for Tesler could still be found on Meta in November 2022. These adverts were posted by 28 different pages, some of which had names entirely unrelated to investing, such as ‘ABC News GB’, ‘Shane Young MMA’ and ‘Butterfly Planet’. Many of these pages are still active and appear to be innocuous pages about food or fashion. As most are no longer running ads, it is impossible to tell from the pages themselves that they were recently advertising an investment platform.

Figure 11: The page facebook.com/Cooking, which describes itself as a cooking school but posted an advertisement for the Tesler platform on 12 January 2022

The variety of pages posting these advertisements, together with the period over which and the frequency with which they were posted, suggests a coordinated campaign to disseminate risky adverts, potentially attempting to reach a wider audience by tapping into topics people are already interested in, such as food or news.
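One simple signal of the coordination described above is the same message appearing on many unrelated pages. The sketch below normalises advert text, groups adverts by message and reports any message posted by at least a chosen number of distinct pages. The page names, texts and the three-page threshold are hypothetical.

```python
import re
from collections import defaultdict


def normalise(text: str) -> str:
    """Lower-case and strip punctuation so trivial edits map to the same message."""
    return " ".join(re.findall(r"\w+", text.lower()))


def suspected_campaigns(ads: list[tuple[str, str]], min_pages: int = 3) -> dict[str, set[str]]:
    """Messages posted by at least `min_pages` distinct pages. `ads` holds (page, text) pairs."""
    pages_by_message: dict[str, set[str]] = defaultdict(set)
    for page, text in ads:
        pages_by_message[normalise(text)].add(page)
    return {msg: pages for msg, pages in pages_by_message.items() if len(pages) >= min_pages}


ads = [  # invented page names and texts
    ("Cookery Corner", "Earn daily, no risk!"),
    ("Pet Photos", "earn DAILY no risk"),
    ("Garden Hub", "Earn daily. No risk."),
    ("Garden Hub", "Spring bulbs on sale"),
]
print(suspected_campaigns(ads))
```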

Challenges and caveats

We faced some significant challenges in training these algorithms:

Quantity of annotated data

The primary challenge in training models for this project was the small size of our coded dataset. The smallest risk category had only 88 positive examples, 16% of all the relevant adverts. We tested several mitigations, such as increasing the ‘weight’ given to positive examples, but these were useful only for some models.
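Increasing the weight of positive examples usually means multiplying their contribution to the training loss. A common choice, and an assumption here rather than the setting used in this project, is the ratio of negative to positive examples. With 88 positives making up about 16% of the data, that implies roughly 462 negatives and a weight of 5.25.

```python
import math


def positive_class_weight(n_positive: int, n_negative: int) -> float:
    """Weight positives so both classes contribute the same total weight to the loss."""
    return n_negative / n_positive


def weighted_log_loss(y_true: list[int], p_pred: list[float], pos_weight: float) -> float:
    """Binary cross-entropy in which errors on positive examples count pos_weight times."""
    eps = 1e-12  # avoid log(0)
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        total -= pos_weight * y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


# Hypothetical split: 88 positives at ~16% implies about 462 negatives.
w = positive_class_weight(88, 462)
print(w)  # 5.25
```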

The obvious remedy is to expand the coded dataset iteratively over time: coding newly collected adverts that the models flag as relevant, as well as samples of newly posted adverts, and adding these to the training dataset. Meta would be in a position to do this were a similar system implemented on its platforms. Doing so is likely not only to increase the accuracy of each model but also to keep it able to detect misleading and fraudulent content as scammers adapt their tactics over time.
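One round of this iterative expansion might select adverts for human coding as sketched below: everything the current models flag, plus a random sample of the remainder so that risky adverts the models missed also have a chance of being coded. The function name, sample size and seed are illustrative assumptions.

```python
import random
from typing import Callable


def select_for_coding(new_ads: list[str], is_flagged: Callable[[str], bool],
                      n_random: int, seed: int = 0) -> list[str]:
    """Pick adverts for the next round of human coding."""
    flagged, unflagged = [], []
    for ad in new_ads:
        (flagged if is_flagged(ad) else unflagged).append(ad)
    # A random sample of unflagged adverts helps reveal risky adverts the models missed.
    rng = random.Random(seed)
    sample = rng.sample(unflagged, min(n_random, len(unflagged)))
    return flagged + sample


batch = ["ad-1", "ad-2", "ad-3", "ad-4", "ad-5"]
to_code = select_for_coding(batch, lambda ad: ad in {"ad-1", "ad-3"}, n_random=2)
print(to_code[:2])  # ['ad-1', 'ad-3']
```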

Precision and recall

To highlight effectively the adverts that deserved a risk flag, the models were tuned for precision. This measure, also called ‘positive predictive value’, tells you what proportion of the adverts flagged as risky by the classifier were also flagged as risky by humans. We chose to optimise the risk-flag models for precision because it was crucial that the system did better than a random sample at increasing the proportion of risky adverts shown to platform moderators.

Precision for the relevance classifier was high, at 80%, but was more variable for the risk models: between 42% and 100% of the adverts they flagged were flagged correctly.
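Tuning for precision typically means choosing the score above which an advert is flagged. The sketch below, using invented labels and scores, computes precision and recall at a given threshold and picks the lowest threshold that reaches a target precision; because recall can only fall as the threshold rises, that choice keeps as much recall as possible. It illustrates the trade-off rather than reproducing our tuning procedure.

```python
from typing import Optional


def precision_recall(y_true: list[int], scores: list[float], threshold: float) -> tuple[float, float]:
    """Precision and recall when adverts scoring >= threshold are flagged."""
    tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(y_true, scores) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def threshold_for_precision(y_true: list[int], scores: list[float], target: float) -> Optional[float]:
    """Lowest candidate threshold whose precision reaches the target, or None."""
    for t in sorted(set(scores)):
        if precision_recall(y_true, scores, t)[0] >= target:
            return t
    return None


# Invented human labels (1 = risky) and classifier scores for six adverts.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
t = threshold_for_precision(labels, scores, target=0.75)
print(t, precision_recall(labels, scores, t))  # 0.6 (0.75, 1.0)
```

Raising the target to 0.9 in this example moves the threshold to 0.8, where precision reaches 1.0 but recall drops to two in three.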

Table 13: Accuracy, precision and recall of the models trained for this project

| Accuracy | Precision | Recall | |